Why Combine Numerical Features And Text Features? Combining numerical and text features in machine learning models has become increasingly important in various applications, particularly natural...

What are open-source large language models? Open-source large language models are advanced AI systems designed to understand and generate human-like text based on the patterns and...

What is CountVectorizer in NLP? CountVectorizer is a text preprocessing technique commonly used in natural language processing (NLP) tasks for converting a collection of text documents into a...
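To make the idea concrete, here is a minimal pure-Python sketch of a count-based representation of a small document collection. It is a simplified stand-in rather than scikit-learn's `CountVectorizer` itself: the `tokenize` and `count_matrix` helpers and the lowercase alphanumeric tokenisation rule are assumptions made for illustration, and the real class has its own tokenisation defaults and returns a sparse matrix.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase the text and keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def count_matrix(documents):
    """Build a sorted vocabulary and one row of token counts per document."""
    tokenized = [tokenize(doc) for doc in documents]
    vocabulary = sorted({token for doc in tokenized for token in doc})
    rows = []
    for doc in tokenized:
        counts = Counter(doc)  # missing tokens count as 0
        rows.append([counts[token] for token in vocabulary])
    return vocabulary, rows


docs = ["The cat sat.", "The cat saw the dog."]
vocabulary, matrix = count_matrix(docs)
print(vocabulary)
for row in matrix:
    print(row)
```

Each row has one column per vocabulary term, so documents of different lengths end up as vectors of the same size.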

Unstructured data has become increasingly prevalent in today's digital age and differs from the more traditional structured data. With the exponential growth of information on the internet, the vast...

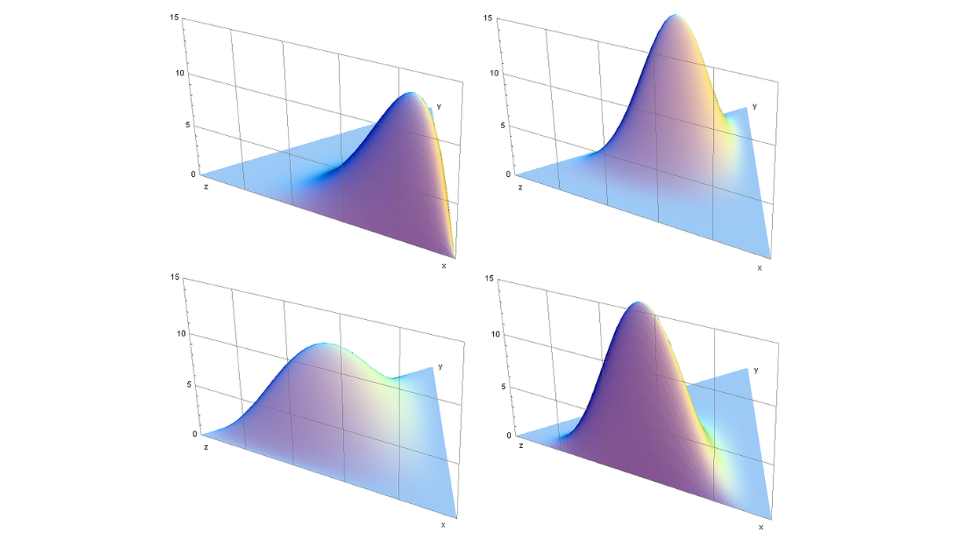

Latent Dirichlet Allocation explained Latent Dirichlet Allocation (LDA) is a statistical model used for topic modelling in natural language processing. It is a generative probabilistic model that...

What is GPT-3? GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art language model developed by OpenAI, a leading artificial intelligence research organization. GPT-3 is a deep neural...

Natural Language Processing (NLP) has become an essential area of research and development in Artificial Intelligence (AI) in recent years. NLP models have been designed to help computers...

In natural language processing, an n-gram is a contiguous sequence of n items from a given sample of text or speech. These items can be characters, words, or other units of text, and they are used to...
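As a small illustration of that definition, the sketch below extracts n-grams from a sequence of items. The `ngrams` helper is a hypothetical name chosen for this example, and splitting on whitespace is an assumed, deliberately naive way to get word tokens.

```python
from typing import List, Sequence, Tuple


def ngrams(items: Sequence[str], n: int) -> List[Tuple[str, ...]]:
    """Return every contiguous run of n items, in order of appearance."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence shorter than n yields an empty list.
    return [tuple(items[i:i + n]) for i in range(len(items) - n + 1)]


# Word-level bigrams (n = 2) from a whitespace-tokenised sentence.
words = "the quick brown fox".split()
word_bigrams = ngrams(words, 2)

# Character-level trigrams (n = 3) from a single word.
char_trigrams = ngrams(list("text"), 3)

print(word_bigrams)
print(char_trigrams)
```

The same function covers both cases because only the choice of item (word or character) changes, not the sliding-window logic.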

What is transfer learning for large language models (LLMs)? Its advantages and disadvantages, the different models available, and its applications in various natural language processing (NLP) tasks. Followed...

![How To Implement Natural Language Processing (NLP) Feature Engineering In Python [8 Techniques]](https://i0.wp.com/spotintelligence.com/wp-content/uploads/2023/03/nlp-feature-engineering.jpg?fit=1200%2C675&ssl=1)

Natural Language Processing (NLP) feature engineering involves transforming raw textual data into numerical features that can be input into machine learning models. Feature engineering is a crucial...

![How To Implement Data Augmentation In Python [Image & Text (NLP)]](https://i0.wp.com/spotintelligence.com/wp-content/uploads/2023/03/Ontwerp-zonder-titel-46-1-2-scaled.webp?fit=600%2C338&ssl=1)

Top 7 ways of implementing data augmentation for both images and text. With the top 3 libraries in Python to use for image processing and NLP. What is data augmentation? Data augmentation is a...

Autoencoder variations explained, common applications and their use in NLP, how to use them for anomaly detection, and a Python implementation in TensorFlow. What is an autoencoder? An autoencoder is a...

![What Is Overfitting & Underfitting [How To Detect & Overcome In Python]](https://i0.wp.com/spotintelligence.com/wp-content/uploads/2023/02/overfitting-underfitting.jpg?fit=960%2C540&ssl=1)

Illustrated examples of overfitting and underfitting, as well as how to detect and overcome them. Overfitting and underfitting are two common problems in machine learning where the model becomes...

Text classification is a fundamental problem in natural language processing (NLP) that involves categorising text data into predefined classes or categories. It can be used in many real-world...

![Tutorial TF-IDF vs Word2Vec For Text Classification [How To In Python With And Without CNN]](https://i0.wp.com/spotintelligence.com/wp-content/uploads/2023/02/word2vec-text-classification.jpg?fit=1024%2C576&ssl=1)

Word2Vec for text classification Word2Vec is a popular algorithm used for natural language processing and text classification. It is a neural network-based approach that learns distributed...

How does the Deep Belief Network algorithm work? What are its common applications? Is it a supervised or unsupervised learning method? How does it compare to CNNs? And how to create an implementation in...

Reading research papers is integral to staying current and advancing in the field of NLP. Research papers are a way to share new ideas, discoveries, and innovations in NLP. They also give a more...

When does it occur? How can you recognise it? And how to adapt your network to avoid the vanishing gradient problem. What is the vanishing gradient problem? The vanishing gradient problem is a...
