Introduction

Language is at the heart of human communication—and in today’s digital world, making sense of language at scale is more important than ever. From powering chatbots and voice assistants to analysing customer feedback and filtering spam, Natural Language Processing (NLP) enables machines to understand, interpret, and generate human language. Python has become the go-to programming language for NLP thanks to its simplicity, rich ecosystem, and wide range of dedicated libraries. Instead of reinventing the wheel, developers can use prebuilt tools and models that handle tasks like tokenisation, sentiment analysis, translation, and summarisation with just a few lines of code.

In this post, we’ll explore the most popular and useful Python NLP libraries in 2025, highlight what they do best, and give you a sense of which ones to choose for different kinds of projects—whether you’re a beginner, a researcher, or building production-ready applications.

Core NLP Foundations

Before diving into specific libraries, it’s helpful to understand what NLP actually involves. At its core, NLP bridges human language and machine understanding, enabling computers to process, analyse, and even generate text in meaningful ways.

Common NLP Tasks

Some of the building blocks you’ll encounter across most libraries include:

- Tokenisation – splitting text into words, phrases, or sentences.

- Stemming & Lemmatisation – reducing words to a base form (e.g., running → run); stemming strips suffixes by rule, while lemmatisation maps each word to its dictionary form.

- Part-of-Speech (POS) Tagging – identifying grammatical roles like nouns, verbs, and adjectives.

- Named Entity Recognition (NER) – extracting entities such as names, dates, and locations.

- Sentiment Analysis – detecting emotions or opinions in text.

- Text Classification – categorising documents (e.g., spam vs. not spam).

- Word Embeddings & Vectorisation – representing words as numerical vectors for machine learning.

- Machine Translation & Summarisation – generating new text from existing content.
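To make tokenisation and vectorisation concrete, here is a minimal, library-free sketch. The regex tokeniser and the count-based "bag of words" vectors are simplifying assumptions for illustration. Real tokenisers handle contractions, URLs and Unicode far more carefully, and word embeddings are dense learned vectors rather than raw counts.

```python
import re
from collections import Counter


def tokenise(text: str) -> list[str]:
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def bag_of_words(tokens: list[str], vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word occurs in the token list."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


docs = ["Python is great for NLP.", "NLP is fun, and Python is fun too!"]
tokenised = [tokenise(doc) for doc in docs]

# Sorted vocabulary of alphabetic tokens (punctuation is left out).
vocabulary = sorted({tok for toks in tokenised for tok in toks if tok.isalpha()})
vectors = [bag_of_words(toks, vocabulary) for toks in tokenised]

print(tokenised[0])  # ['python', 'is', 'great', 'for', 'nlp', '.']
print(vocabulary)
print(vectors)
```

Each document becomes a vector with one position per vocabulary word, which is the kind of numeric input classical machine learning models expect.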

Why Libraries Matter

While you could implement these tasks from scratch, NLP is complex and data-hungry. Libraries provide:

- Prebuilt Algorithms – saving time on implementation.

- Pretrained Models – leveraging existing knowledge from massive datasets.

- Scalability & Efficiency – optimised code for handling large volumes of text.

- Integration with ML Pipelines – smooth compatibility with frameworks like TensorFlow and PyTorch.

In short, NLP libraries allow you to focus on solving problems, not reinventing algorithms.

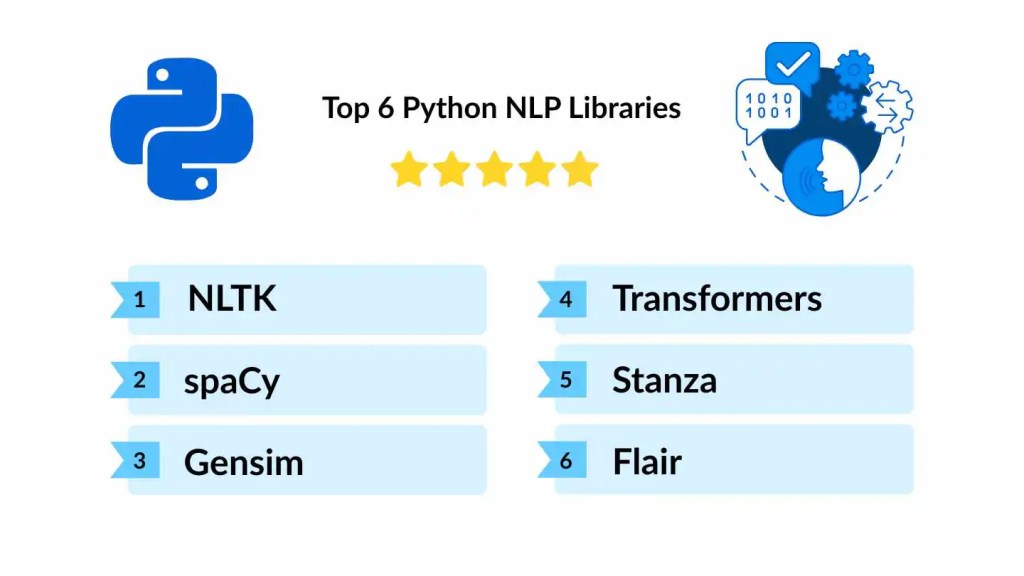

Top 6 Python NLP Libraries

The Python ecosystem is rich with NLP libraries, each tailored for different needs—from foundational text processing to cutting-edge deep learning. Below are some of the most widely used libraries in 2025.

NLTK (Natural Language Toolkit)

One of the earliest and most comprehensive NLP libraries, widely used in education and research.

- Strengths: Offers tools for tokenisation, stemming, POS tagging, parsing, and corpora access.

- Limitations: Can be slower and less suited for production-scale projects compared to newer libraries.

- Best for: Learning NLP fundamentals and prototyping.
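A small sketch of NLTK's stemming, assuming NLTK is installed (`pip install nltk`). `wordpunct_tokenize` is a regex-based tokeniser, chosen here because it needs no downloaded data. The more commonly used `word_tokenize` and `WordNetLemmatizer` require downloaded resources (for example `nltk.download("wordnet")` for the lemmatiser).

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

# wordpunct_tokenize splits on a simple regex, so no extra data download is needed.
tokens = wordpunct_tokenize("The runners were running quickly!")

# The Porter stemmer strips common English suffixes by rule.
stemmer = PorterStemmer()
stems = [stemmer.stem(token) for token in tokens]

print(tokens)
print(stems)
```

Stems need not be dictionary words (the Porter stemmer reduces "studies" to "studi"), which is the main practical difference from lemmatisation.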

spaCy

A modern, production-ready NLP library designed for speed and scalability.

- Features: Tokenisation, NER, dependency parsing, POS tagging, pretrained pipelines for multiple languages.

- Strengths: Optimised for performance, integrates well with ML frameworks like TensorFlow and PyTorch.

- Best for: Large-scale, real-world NLP applications.

[Figure: POS tagging example]

Gensim

Specialised in topic modelling and similarity detection.

- Features: Implements algorithms like Word2Vec, Doc2Vec, and LDA.

- Strengths: Efficient handling of large text corpora with a streaming approach.

- Best for: Document clustering, semantic similarity, and word embeddings.

Transformers (Hugging Face)

A game-changer in NLP, offering easy access to pretrained state-of-the-art models such as BERT, GPT, and RoBERTa.

- Features: Text classification, summarisation, translation, question answering, and more with minimal code.

- Strengths: Community-driven, vast model hub, continuously updated.

- Best for: Cutting-edge NLP tasks requiring high accuracy and pretrained models.
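As a sketch of the "minimal code" point, a sentiment classifier takes a few lines with the `pipeline` API. The model ID is an assumption: it pins the DistilBERT SST-2 checkpoint that the sentiment-analysis pipeline has used by default, and it is downloaded from the Hugging Face Hub on first run.

```python
from transformers import pipeline

# Pin the model explicitly so results do not change if the default changes.
classifier = pipeline(
    "sentiment-analysis",
    model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
)

results = classifier([
    "Hugging Face makes pretrained models easy to use.",
    "This tokeniser keeps crashing on my input.",
])
for result in results:
    # Each result is a dict with a "label" (POSITIVE/NEGATIVE) and a "score".
    print(result["label"], round(result["score"], 3))
```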

Stanza (formerly StanfordNLP)

Developed by the Stanford NLP Group to provide accurate linguistic analysis.

- Features: Tokenisation, POS tagging, dependency parsing, NER, with strong multilingual support.

- Strengths: Built on PyTorch, known for accuracy on academic benchmarks.

- Best for: Multilingual and linguistically detailed tasks.

Flair

Lightweight NLP library by Zalando Research focused on sequence labelling and embeddings.

- Features: Simple interfaces for NER, POS tagging, sentiment analysis, and easy use of different embeddings.

- Strengths: Combines word embeddings (BERT, ELMo, GloVe, etc.) seamlessly.

- Best for: Quick experimentation with embeddings and tagging tasks.

Specialised or Niche Python NLP Libraries

Beyond the mainstream NLP libraries, the Python ecosystem also offers several specialised tools designed for specific tasks or audiences. These libraries can be perfect for quick prototypes, multilingual projects, or focused NLP problems.

TextBlob

Beginner-friendly NLP library built on top of NLTK and Pattern.

- Features: Sentiment analysis, POS tagging, noun phrase extraction, translation.

- Strengths: Simple API, easy for beginners or small projects.

- Best for: Quick sentiment analysis or text processing without diving deep into NLP pipelines.

Polyglot

Multilingual NLP library supporting over 40 languages.

- Features: Tokenisation, NER, sentiment analysis, and transliteration.

- Strengths: Works well with languages other than English.

- Best for: Projects that require support for multiple languages.

fastText

Developed by Facebook AI, focused on text classification and word embeddings.

- Features: Supervised text classification and unsupervised word representations, with hierarchical softmax for fast training.

- Strengths: Extremely fast and efficient on large datasets.

- Best for: Text classification, large-scale NLP tasks, and embedding generation.

OpenNMT & Fairseq

Libraries for neural machine translation and sequence modelling.

- Features: Support for training custom translation, summarisation, and sequence-to-sequence models.

- Strengths: High-quality, production-ready translation and sequence modelling.

- Best for: Machine translation, text summarisation, and other advanced sequence tasks.

Others to Consider

- KoNLPy – Korean NLP toolkit.

- PyText – Facebook AI library for production-focused NLP pipelines (now archived and no longer maintained).

- AllenNLP – Research-focused NLP with deep learning models (in maintenance mode since late 2022).

These specialised libraries allow developers to tackle specific NLP problems more effectively, complementing the broader capabilities of libraries like spaCy or Hugging Face Transformers.

How to Choose the Right Python Library for NLP

With so many Python NLP libraries available, choosing the right one can feel overwhelming. The best choice depends on your project goals, scale, and experience level. Here’s a guide to help you decide.

Consider Your Project Type

- Educational or learning purposes:
  - Libraries: NLTK, TextBlob
  - Why: Simple, easy-to-understand APIs, ideal for experimenting with basic NLP concepts.

- Production-ready applications:
  - Libraries: spaCy, Flair, fastText
  - Why: Optimised for speed, large datasets, and integration with ML frameworks.

- Cutting-edge NLP tasks:
  - Libraries: Transformers (Hugging Face), AllenNLP, Fairseq
  - Why: Access to state-of-the-art models for translation, summarisation, or text generation.

Language Support

If your project requires multilingual processing, consider:

- Stanza – robust multilingual models

- Polyglot – supports over 40 languages

Task Complexity

- Simple sentiment analysis or text processing → TextBlob

- NER, dependency parsing, or tokenisation → spaCy, Stanza

- Word embeddings, semantic similarity, topic modelling → Gensim, fastText

- Advanced language understanding or generation → Transformers (Hugging Face)

[Figure: Semantic similarity]
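Semantic similarity between two texts is commonly scored as the cosine of the angle between their vectors: dot(a, b) / (|a| * |b|). This library-free sketch uses raw word-count vectors as a stand-in, an assumption made for simplicity; in practice the vectors would come from an embedding model (Gensim, fastText or Transformers), which captures meaning rather than mere word overlap.

```python
import math
from collections import Counter


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def count_vector(text: str, vocabulary: list[str]) -> list[float]:
    """Word-count vector over a fixed vocabulary (a crude stand-in for embeddings)."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


texts = ["python nlp libraries", "python nlp tools", "cooking pasta tonight"]
vocabulary = sorted({word for text in texts for word in text.split()})
vectors = [count_vector(text, vocabulary) for text in texts]

print(round(cosine_similarity(vectors[0], vectors[1]), 3))  # 0.667
print(round(cosine_similarity(vectors[0], vectors[2]), 3))  # 0.0
```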

Performance Considerations

- For large-scale datasets, prioritise libraries optimised for speed: spaCy, fastText

- For research and experimentation, flexibility matters more than speed: NLTK, AllenNLP
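To illustrate the speed point for spaCy, the usual pattern for large volumes of text is to stream documents through `nlp.pipe` in batches rather than calling `nlp()` once per text. This sketch uses a blank English pipeline (tokeniser only) so it needs no model download; the synthetic texts and the `batch_size` value are arbitrary assumptions.

```python
import spacy

# A blank English pipeline only tokenises, so no model download is required.
# With a trained pipeline you would load it and disable unneeded components,
# e.g. spacy.load("en_core_web_sm", disable=["parser", "ner"]).
nlp = spacy.blank("en")

# A generator, so the texts are never all held in memory at once.
texts = (f"Document number {i} about NLP." for i in range(10_000))

# nlp.pipe processes the stream in batches instead of one text at a time.
token_counts = [len(doc) for doc in nlp.pipe(texts, batch_size=1000)]
print(len(token_counts), token_counts[0])
```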

Integration with ML Pipelines

If your NLP project involves deep learning, check compatibility:

- Transformers – integrates with PyTorch, and has also supported TensorFlow and JAX

- Flair, AllenNLP – built on PyTorch

- spaCy – can be combined with machine learning models for production pipelines
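To show what PyTorch integration looks like below the `pipeline` helper, this sketch loads a Transformers tokenizer and model directly and runs a plain PyTorch forward pass. The checkpoint ID is an assumption (a small English sentiment model on the Hugging Face Hub); any sequence-classification checkpoint would follow the same pattern.

```python
import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

model_id = "distilbert/distilbert-base-uncased-finetuned-sst-2-english"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForSequenceClassification.from_pretrained(model_id)
model.eval()  # inference mode: disables dropout

texts = ["I love this library.", "The documentation is confusing."]
# Tokenise into padded PyTorch tensors ("pt").
inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")

with torch.no_grad():  # no gradients are needed for inference
    logits = model(**inputs).logits  # shape: (batch_size, num_labels)

probs = torch.softmax(logits, dim=-1)
labels = [model.config.id2label[i] for i in probs.argmax(dim=-1).tolist()]
print(labels, probs.tolist())
```

Because the model is an ordinary `torch.nn.Module`, the same objects can be fine-tuned with a standard PyTorch training loop.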

Key takeaway: There’s no one-size-fits-all library. Beginners can start with NLTK or TextBlob, while advanced projects may rely on spaCy and Hugging Face Transformers. The right library balances ease of use, performance, and the complexity of your NLP tasks.

How to Implement NLP Using Python Libraries

Seeing NLP libraries in action is the best way to understand their strengths. Below are simple examples using popular Python NLP libraries.

Tokenisation with spaCy

```python
import spacy

# Load the small English pipeline (install it first with:
#   python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

text = "Python is a great language for NLP!"

# Process the text
doc = nlp(text)

# Print the tokens
print([token.text for token in doc])
```

Output:

```
['Python', 'is', 'a', 'great', 'language', 'for', 'NLP', '!']
```

Use case: Splitting text into words for further analysis, like POS tagging or NER.

Sentiment Analysis with TextBlob

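```python
from textblob import TextBlob

text = "I love working with Python NLP libraries!"
blob = TextBlob(text)

# polarity ranges from -1.0 (negative) to 1.0 (positive);
# subjectivity ranges from 0.0 (objective) to 1.0 (subjective).
print(f"Sentiment: {blob.sentiment}")
```

Output:

```
Sentiment: Sentiment(polarity=0.625, subjectivity=0.6)
```

Use case: Quickly determine if text is positive, negative, or neutral.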

Named Entity Recognition (NER) with Hugging Face Transformers

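```python
from transformers import pipeline

# Load a pretrained NER pipeline. aggregation_strategy="simple" merges
# sub-word tokens into whole entities (it replaces the deprecated
# grouped_entities=True flag). The model is pinned to the checkpoint
# the "ner" pipeline has used by default.
ner = pipeline(
    "ner",
    model="dbmdz/bert-large-cased-finetuned-conll03-english",
    aggregation_strategy="simple",
)

text = "Apple is looking to hire more engineers in California."
entities = ner(text)
print(entities)
```

Output (abridged: scores rounded, start/end character offsets omitted):

```
[{'entity_group': 'ORG', 'score': 0.99, 'word': 'Apple'},
 {'entity_group': 'LOC', 'score': 0.98, 'word': 'California'}]
```

Use case: Extracting companies, locations, and other entities from text.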

Topic Modelling with Gensim

```python
from gensim import corpora, models

documents = [
    "I love programming in Python",
    "Python NLP libraries are very useful",
    "Machine learning can be fun",
]

# Tokenise (lowercase + whitespace split) and build the dictionary
texts = [doc.lower().split() for doc in documents]
dictionary = corpora.Dictionary(texts)
corpus = [dictionary.doc2bow(text) for text in texts]

# Train an LDA model; random_state makes the topics reproducible
lda = models.LdaModel(corpus, num_topics=2, id2word=dictionary, passes=10, random_state=42)

# Print each topic as a weighted list of words
for topic in lda.print_topics():
    print(topic)
```

Use case: Discover hidden topics in a collection of documents. Three short documents are far too few for meaningful topics; the example only shows the workflow.

Quick Comparison

| Task | Beginner | Production | Advanced |
|---|---|---|---|
| Tokenisation | NLTK, TextBlob | spaCy | Transformers |
| Sentiment Analysis | TextBlob | Flair | Transformers |
| NER | NLTK | spaCy | Transformers |
| Topic Modelling | Gensim | Gensim | N/A |
| Translation | TextBlob | OpenNMT | Transformers |

Tip: Start simple, then switch to production-ready or advanced models as your project grows.

Future of Python NLP Libraries

The field of NLP is evolving at a rapid pace, and Python libraries are keeping up with the latest advances in AI and machine learning. Here are some trends shaping the future of NLP:

Integration with Large Language Models (LLMs)

- Libraries like Hugging Face Transformers now make it easy to use LLMs for tasks previously requiring specialised models.

- Expect more prebuilt pipelines for chatbots, summarisation, and content generation.

- LLMs allow zero-shot and few-shot learning, reducing the need for task-specific training data.
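Zero-shot classification can already be tried through the Transformers `pipeline`. Note that this sketch swaps in an NLI-based model (`facebook/bart-large-mnli`, an assumption, and a download of over 1 GB) rather than an LLM; it illustrates the same idea of classifying text into labels the model was never explicitly trained on. The text and candidate labels are hypothetical.

```python
from transformers import pipeline

# An NLI model commonly used for zero-shot classification (large download).
classifier = pipeline("zero-shot-classification", model="facebook/bart-large-mnli")

result = classifier(
    "The new update drains my phone battery within hours.",
    candidate_labels=["battery", "camera", "price"],
)
# Labels come back sorted from most to least likely, with matching scores.
print(result["labels"][0], round(result["scores"][0], 3))
```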

Multimodal NLP

- Combining text with images, audio, or video is becoming more common.

- Libraries are starting to integrate multimodal capabilities, enabling tasks like video captioning or image-text analysis.

- Future NLP pipelines will often handle text alongside other data types seamlessly.

Lightweight and Efficient Models

- Speed and efficiency are critical for production use.

- Expect more small, optimised models that perform nearly as well as large models and are suitable for edge devices or mobile apps.

Specialised and Domain-Specific NLP

- More libraries will cater to specific industries, such as healthcare, law, finance, and scientific research.

- Domain-specific models and tokenisers improve accuracy while reducing training time.

Enhanced Multilingual Support

- With globalisation, support for non-English languages will continue to grow.

- Libraries like Stanza and Polyglot will expand language coverage and accuracy.

Key takeaway: NLP libraries are moving toward greater accessibility, higher performance, and integration with advanced AI models. Staying updated with these trends ensures you’re using the right tools for both today’s and tomorrow’s NLP challenges.

Conclusion

Python’s NLP ecosystem is vast, versatile, and constantly evolving. From classic libraries like NLTK for learning, to production-ready tools like spaCy, and cutting-edge solutions like Hugging Face Transformers, there’s a library for every need and skill level.

When choosing a library, consider:

- Your project goals (learning, prototyping, production, or advanced AI tasks).

- Task complexity (tokenisation, sentiment analysis, NER, translation, or text generation).

- Performance requirements (speed, scalability, multilingual support).

The future of NLP promises even more powerful tools, with large language models, multimodal capabilities, and optimised domain-specific libraries. By exploring and experimenting with these tools today, you can unlock new possibilities in text analysis, language understanding, and AI-driven communication.
